The hum of the modern world is not the sound of traffic or the chatter of crowds; it is the low, steady drone of cooling fans in data centers. For decades, this sound has been the heartbeat of the internet, a necessary byproduct of keeping the silicon brains of our civilization from melting down. But as we stand on the precipice of a new era defined by artificial intelligence, high-performance computing (HPC), and the insatiable demand for data, that hum is becoming a roar—and a liability.

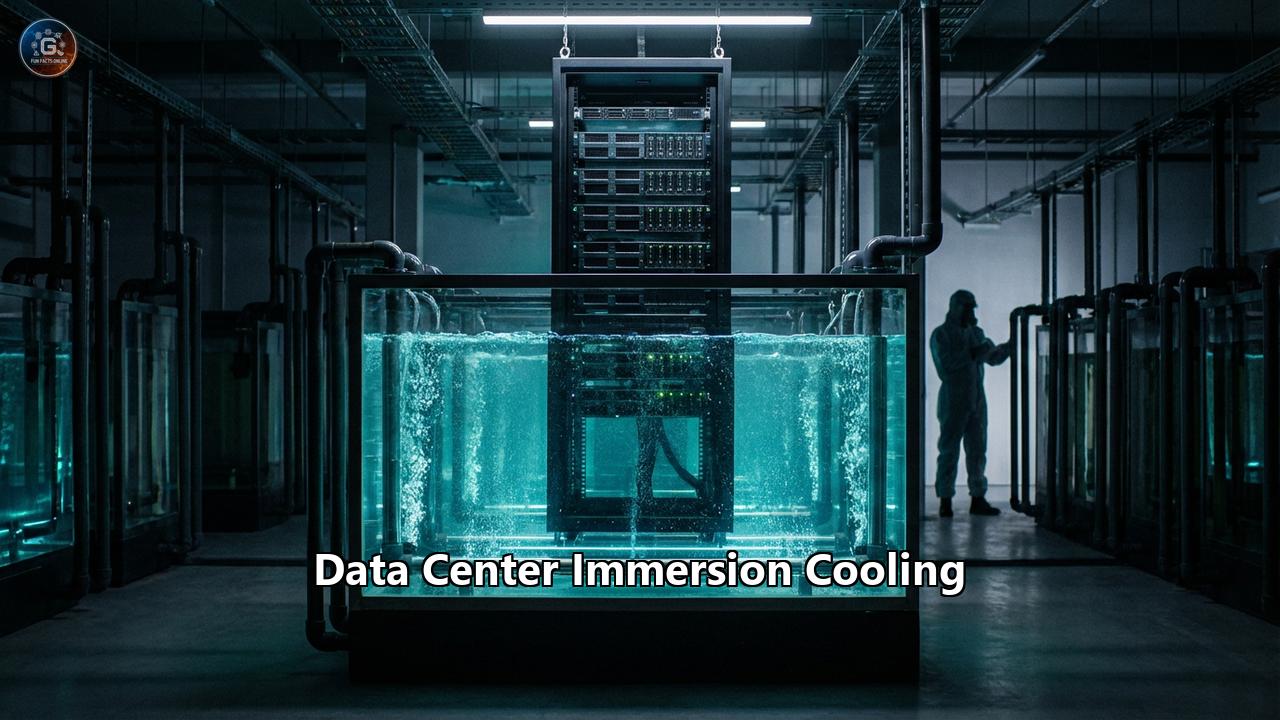

The traditional method of cooling servers by blowing cold air over hot components is reaching its physical and economic limits. Moore’s Law is pushing transistor densities to the atomic scale, and the resulting thermal density is outpacing the capacity of air to carry heat away. Enter immersion cooling: a technology that sounds like science fiction but is rapidly becoming the gold standard for next-generation digital infrastructure. By submerging sophisticated electronics into baths of non-conductive liquid, we are not just cooling computers; we are reimagining the very architecture of the data center.

This article explores the comprehensive landscape of data center immersion cooling. We will journey through the physics of heat transfer, the engineering marvels of single and two-phase systems, the chemical revolution of dielectric fluids, and the real-world case studies that are proving the technology’s viability. We will also confront the challenges of adoption, from the complexities of retrofitting to the safety standards defining this new frontier, and look ahead to a future where data centers are silent, sustainable, and powerful beyond today’s imagination.

The Thermal Crisis: Why Air is Failing

To understand the rise of immersion cooling, one must first appreciate the failure of air. For fifty years, the data center industry has relied on a simple premise: conditioning the air in a room to keep servers cool. This involves massive chillers, Computer Room Air Conditioning (CRAC) units, raised floors, and hot/cold aisle containment systems. It is an ecosystem built around moving vast volumes of air to strip heat away from small, hot surfaces.

However, air is a poor conductor of heat. Its thermal conductivity is low, and its capacity to hold heat is limited. As chip manufacturers like NVIDIA, AMD, and Intel push thermal design power (TDP) per chip beyond 400W, 700W, and even 1000W, the airflow required to cool these components becomes hurricane-like. The energy needed just to spin the fans can consume 20% or more of a server’s total power.

Furthermore, air cooling imposes a hard limit on density. In a standard air-cooled rack, you can typically manage 15-20 kW of power before you create "hot spots" that threaten hardware stability. To cool a 100 kW AI cluster with air requires spacing out the racks, wasting valuable real estate and increasing the length of cabling, which introduces latency.

The industry has hit a thermal wall. The solution lies in a fundamental shift in physics: moving from a gas (air) to a liquid medium.

The Physics of Immersion: A Superior Medium

Per unit volume, a liquid coolant can absorb on the order of 1,000 times more heat than air for the same temperature rise. This is the foundational fact that makes immersion cooling not just an alternative, but an inevitability for high-density computing. Water, the most efficient common coolant, is conductive and corrosive, making it dangerous to electronics if it touches them directly. This is why "Direct-to-Chip" (DTC) liquid cooling uses metal cold plates and pipes to keep the water separate from the silicon.

Immersion cooling takes a different approach. It uses dielectric fluids—liquids that do not conduct electricity. Because these fluids are electrically insulating, you can safely submerge an entire server—motherboard, CPU, GPU, memory, power supply, and cabling—directly into the liquid.

This creates a thermal environment of unparalleled efficiency. Every component is in direct contact with the coolant. There are no hot spots because the fluid fills every nook and cranny. The massive heat sinks required for air cooling are removed, replaced by the natural thermal mass of the fluid. The screaming fans are gone. The data center becomes silent, dense, and thermally stable.

The Two Paths: Single-Phase vs. Two-Phase Immersion

The world of immersion cooling is divided into two primary schools of thought, each with its own mechanics, benefits, and champions.

1. Single-Phase Immersion Cooling

In a single-phase system, the dielectric fluid stays liquid throughout the entire cooling cycle. It never boils.

- How it works: Servers are lowered vertically into a tank filled with a hydrocarbon-based fluid (often similar to mineral oil or synthetic variations). The heat from the components warms the fluid, which rises naturally via convection or is pumped out of the tank. The warm fluid flows through a Coolant Distribution Unit (CDU)—essentially a heat exchanger—where it transfers its heat to a separate water loop (the facility water). The cooled fluid is then pumped back into the tank.

- The Fluid: These fluids have high boiling points. They are viscous, oily substances that are excellent at retaining heat. Leading examples include synthetic fluids from major petrochemical companies like Shell and Castrol, as well as bio-based options from Cargill.

- Pros: It is mechanically simpler, easier to contain (less prone to leaking as vapor), and the fluids are generally cheaper and longer-lasting. It is also quieter and requires less maintenance than two-phase systems.

- Cons: It is slightly less efficient at removing extreme heat flux compared to boiling fluids. Dealing with "oily" servers can be messy for technicians during maintenance.

2. Two-Phase Immersion Cooling

Two-phase cooling is the "Ferrari" of immersion—higher performance, higher cost, and higher complexity.

- How it works: The electronic components are submerged in a specially engineered fluorochemical fluid with a low boiling point (often around 50°C or 122°F). When the chips heat up, the fluid boils directly on the surface of the component. This phase change—from liquid to gas—absorbs a massive amount of energy (latent heat of vaporization). The vapor rises to the top of the sealed tank, hits a condenser coil (cooled by water), turns back into liquid, and "rains" back down into the bath.

- The Fluid: Historically dominated by 3M’s Novec line (more on this later), these fluids are typically fluorinated compounds (PFAS). They are thinner than water, non-oily, and evaporate instantly, leaving components dry.

- Pros: Unmatched heat rejection capability. It can handle extreme chip densities (100kW+ per rack) with ease. The boiling action creates natural turbulence that scrubs heat away from the chip surface.

- Cons: The fluids are incredibly expensive (hundreds of dollars per gallon). The tanks must be hermetically sealed to prevent the expensive gas from escaping. The environmental concerns regarding PFAS are leading to regulatory bans and phase-outs, complicating the supply chain.

The Great Fluid Transition: Life After Novec

A critical turning point in the immersion cooling narrative occurred in late 2022, when 3M announced it would cease manufacturing PFAS (per- and polyfluoroalkyl substances) by the end of 2025. This included their ubiquitous Novec fluids, which were the industry standard for two-phase cooling.

This sent shockwaves through the market but also spurred a wave of innovation. The industry is now pivoting toward sustainable, PFAS-free alternatives.

- Hydrocarbons & Synthetic Oils: For single-phase systems, the market is robust. Companies like Shell (with their Gas-to-Liquid S5 X fluid), Castrol (ON product line), and ExxonMobil have developed highly refined synthetic fluids that offer excellent thermal properties without the environmental baggage.

- Bio-Based Fluids: Cargill has introduced NatureCool, a plant-based dielectric fluid. Derived from vegetable oils (soybean), it is over 90% renewable, biodegradable, and carbon-neutral. This appeals strongly to hyperscalers with aggressive sustainability goals.

- Next-Gen Fluorinated Fluids: For two-phase applications, the search is on for "drop-in" replacements that mimic the boiling properties of Novec but without the persistence in the environment. Companies like Chemours and Honeywell are developing hydrofluoroolefins (HFOs) that break down in the atmosphere in days rather than millennia, offering a regulatory-compliant path for high-performance two-phase cooling.

Case Studies: Pioneers of the Deep

The theory is sound, but the application is where the revolution is verified. Several major players have moved beyond proof-of-concept into production.

Alibaba Cloud: The Scale of InnoChill

Alibaba Cloud has been a global trailblazer. Facing the massive compute demands of China's digital economy, they deployed their "InnoChill" single-phase immersion cooling technology at scale. In their Hangzhou data center, they achieved a Power Usage Effectiveness (PUE) of 1.05—stunningly close to the perfect 1.0. By eliminating fans and air conditioning, they reported energy savings of 45% compared to traditional data centers. This is not a small experiment; it is a hyperscale deployment proving that immersion can support massive AI and big data workloads reliably.

Microsoft: From Under the Sea to the Desert

Microsoft’s journey began with Project Natick, where they sank a data center off the coast of Scotland. They discovered that the nitrogen-filled, stable temperature environment significantly reduced hardware failure rates. They brought this logic on land to Quincy, Washington, where they became the first major cloud provider to run a production two-phase immersion cooling system. They found that the stable thermal environment allowed them to overclock servers (run them faster than rated) without overheating, effectively getting more compute power out of the same silicon.

Wyoming Hyperscale: The Sustainable Blueprint

In a bold move, Wyoming Hyperscale White Box has designed a facility from the ground up to be "air-cooling free." Partnering with Submer (a leading immersion tank manufacturer), they are utilizing single-phase immersion to cool high-density racks. Crucially, they are capturing the waste heat—which in liquid form is much easier to transport than hot air—and using it to heat a nearby indoor vertical farm. This "industrial symbiosis" turns the data center from a waste-generator into a heat-provider, closing the sustainability loop.

Bitfury & Allied Control: The Crypto Crucible

Before AI took the spotlight, cryptocurrency mining was the driver of high-density cooling. Bitfury, through its acquisition of Allied Control, deployed massive 40MW+ two-phase immersion cooling mines in the Republic of Georgia. These facilities proved that you could run chips at extreme intensities (250kW per rack) in hot climates without massive air conditioning bills. They demonstrated that immersion cooling could allow data centers to be built in locations previously thought too hot or lacking in water for traditional cooling.

The Operational Reality: Robots, Cranes, and Drips

Adopting immersion cooling changes the daily life of a data center technician. You can no longer simply walk up to a rack and hot-swap a hard drive.

- Tank Architecture: Instead of vertical racks with doors, immersion data centers look like rows of chest freezers. These "tanks" or "pods" hold the fluid and the servers (which are inserted vertically from the top).

- Maintenance: Servicing a server requires lifting it out of the fluid. In single-phase systems, the server is dripping with oil. Technicians use service rails and drip trays to let the fluid run back into the tank. While modern fluids are non-toxic, they are slippery. "Hot swapping" requires specific procedures to ensure fluid integrity.

- The Rise of Automation: Because lifting heavy, oil-soaked servers is ergonomically difficult for humans, immersion cooling is driving the adoption of robotic maintenance. Integrated gantry cranes and robotic arms can lift servers out of tanks, place them in service cradles, or even swap components, reducing the risk of human error or injury.

Safety and Standardization: The OCP Factor

For years, a lack of standards held immersion cooling back. Every vendor had a different tank size, fluid spec, and warranty policy. This is changing rapidly, driven by the Open Compute Project (OCP).

The OCP’s Immersion Cooling Project has released guidelines that are becoming the industry bible. They cover:

- Fluid Harmony: Defining material compatibility to ensure the fluid doesn't dissolve the seals, glues, or labels on the servers.

- Tank Specs: Standardizing dimensions so that servers can be interoperable between different tank vendors.

- Safety (UL/IEC 62368-1): The 4th edition of this safety standard now includes specific annexes for liquid-filled components. It addresses risks like fluid displacement (overflow), pressure buildup, and flammability (requiring high flash points for single-phase fluids).

These standards are crucial for "warranty capability." In the past, dipping a server in oil voided the warranty. Now, major OEMs like Dell, HPE, and Supermicro offer servers specifically designed and warrantied for immersion, often stripping off the useless fans and air shrouds at the factory.

The Future: A Silent, Dense Horizon

As we look toward 2030, the trajectory is clear. The explosion of Generative AI is creating racks that draw 100kW or more. Air cooling simply cannot physically handle this density. We are seeing a bifurcation in the market:

- Legacy Enterprise: Standard business apps will likely remain on air-cooled infrastructure for some time.

- AI & HPC Core: The "brains" of the internet—training clusters for LLMs, scientific simulation, and high-frequency trading—will move almost exclusively to liquid cooling, with immersion taking the highest-density share.

We will see the rise of "Dark" Data Centers—lights-out facilities designed for immersion tanks and robotic servicing, optimized purely for efficiency rather than human occupancy. We will see data centers integrated into district heating networks in Europe and North America, where the 50°C water output from immersion tanks heats thousands of homes, turning the digital cloud into physical warmth.

Immersion cooling is no longer a niche experiment for crypto miners. It is the necessary evolution of the data center. It is the technology that will allow us to continue scaling our digital ambitions without cooking the planet. The hum of the fans is fading, replaced by the silent efficiency of the fluid. The age of immersion has arrived.

Deep Dive: The Comprehensive Guide to Data Center Immersion Cooling

Chapter 1: The Thermodynamics of Necessity

To understand why the industry is turning to liquid, we must look at the thermal wall we have hit.

The Power Density Problem

For years, the average rack density in a data center was 5-8 kW. A standard raised-floor air system could handle this easily. But modern AI hardware has changed the game. An NVIDIA H100 GPU has a TDP of up to 700W. A server might hold 8 of them. A single rack of these servers can exceed 40kW, and clustered "SuperPODs" are pushing towards 100kW per rack.
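The rack figure above can be checked with simple arithmetic. The sketch below is illustrative only: the 80% non-GPU overhead (CPUs, memory, networking, power conversion) and the four servers per rack are assumptions chosen for the example, not figures from any vendor.

```python
def rack_power_kw(gpus_per_server, gpu_tdp_w, overhead_fraction, servers_per_rack):
    """Estimate rack power in kW.

    overhead_fraction is the assumed extra power for everything that is
    not a GPU, expressed as a fraction of total GPU power.
    """
    gpu_power_w = gpus_per_server * gpu_tdp_w
    server_power_w = gpu_power_w * (1.0 + overhead_fraction)
    return servers_per_rack * server_power_w / 1000.0


# 8 GPUs at 700 W each, assumed 80% overhead, assumed 4 servers per rack
estimate = rack_power_kw(8, 700, 0.8, 4)
print(f"{estimate:.1f} kW per rack")
```

Under these assumptions the estimate lands at roughly 40 kW, consistent with the figure quoted above. Changing the overhead or server count shifts the result proportionally.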

Air is a gas. Its molecules are far apart. To remove 100kW of heat with air, you need to move a volume of air so massive it creates acoustic issues (damaging hard drives) and requires enormous energy.

Liquid vs. Air

- Thermal Conductivity: Water is ~24x more conductive than air. Dielectric fluids vary but are significantly better than air.

- Volumetric Heat Capacity: Liquid can "hold" roughly 1000x more heat energy per unit volume than air for the same temperature rise.

- This means a slow-moving stream of liquid can remove the same amount of heat as a screaming hurricane of air.
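To make the comparison concrete, the flow needed to carry a heat load follows from the energy balance Q = ρ · V̇ · cp · ΔT. The sketch below applies it to a 100 kW rack. The property values are rounded, assumed figures for air and for a generic hydrocarbon dielectric oil, and the 10 K coolant temperature rise is also an assumption.

```python
def volumetric_flow_m3s(heat_w, density_kg_m3, cp_j_kgk, delta_t_k):
    """Volumetric flow (m^3/s) needed to absorb heat_w with a rise of delta_t_k."""
    if delta_t_k <= 0:
        raise ValueError("delta_t_k must be positive")
    return heat_w / (density_kg_m3 * cp_j_kgk * delta_t_k)


# Assumed, rounded property values (not taken from the article)
AIR = {"density_kg_m3": 1.2, "cp_j_kgk": 1005.0}
OIL = {"density_kg_m3": 850.0, "cp_j_kgk": 2000.0}  # generic dielectric oil

heat_w = 100_000.0  # 100 kW rack
delta_t_k = 10.0    # assumed coolant temperature rise in kelvin

air_flow = volumetric_flow_m3s(heat_w, delta_t_k=delta_t_k, **AIR)
oil_flow = volumetric_flow_m3s(heat_w, delta_t_k=delta_t_k, **OIL)

print(f"air: {air_flow:.2f} m^3/s")
print(f"oil: {oil_flow * 1000:.2f} L/s")
print(f"ratio: {air_flow / oil_flow:.0f}x")
```

With these inputs the air stream needs roughly 8.3 cubic meters per second, while the oil needs about 5.9 liters per second, a volume ratio of around 1,400. The exact ratio depends on the fluid chosen.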

Chapter 2: The Technology Stack

Single-Phase Immersion (1-PIC)

- The Tank: Often looks like a horizontal chest freezer. It contains the fluid and the busbars for power.

- The Coolant Distribution Unit (CDU): This is the heart of the system. It contains pumps and a heat exchanger (usually plate-and-frame). It circulates the dielectric fluid from the tank, runs it past a plate cooled by facility water, and returns it.

- Natural vs. Forced Convection: Some 1-PIC systems rely on the natural rise of hot fluid. Others use baffles and directed flow (forced convection) to ensure fresh cool fluid hits the high-power chips (CPUs/GPUs) first.

Two-Phase Immersion (2-PIC)

- The Sealed Tank: The tank must be vapor-tight.

- The Boiling Process: The fluid (e.g., a fluorinated ketone) has a boiling point of ~50°C. When the chip hits this temperature, the fluid boils. The phase change (liquid to gas) absorbs massive energy without the temperature rising further. This keeps chips at a rock-steady temperature.

- The Condenser: Coils running cold water sit at the top of the tank ("headspace"). The rising vapor hits these coils, condenses back to drops, and falls.

- Zero Pumps (Internal): Inside the tank, the movement is passive. No pumps are needed to move fluid across the chips; physics does the work.
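The boiling step can be sized with the latent heat relation ṁ = Q / h_fg: the mass of fluid vaporized per second equals the heat load divided by the latent heat of vaporization. The sketch below assumes a latent heat of 88 kJ/kg, a value in the range published for fluorinated ketone fluids. Treat it as a placeholder and take the real number from the fluid's datasheet.

```python
def vapor_mass_flow_kg_s(heat_w, latent_heat_j_kg):
    """Mass of fluid boiled off per second to absorb heat_w by phase change alone."""
    if latent_heat_j_kg <= 0:
        raise ValueError("latent_heat_j_kg must be positive")
    return heat_w / latent_heat_j_kg


ASSUMED_LATENT_HEAT_J_KG = 88_000.0  # assumption; check the fluid datasheet

chip_rate = vapor_mass_flow_kg_s(700.0, ASSUMED_LATENT_HEAT_J_KG)      # one 700 W chip
tank_rate = vapor_mass_flow_kg_s(100_000.0, ASSUMED_LATENT_HEAT_J_KG)  # 100 kW tank

print(f"per chip: {chip_rate * 1000:.1f} g/s")
print(f"per tank: {tank_rate:.2f} kg/s")
```

Under this assumption a single 700 W chip boils off about 8 grams of fluid per second, and the condenser must return the same mass to the bath to keep the level steady.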

Chapter 3: The Fluid Landscape & Environmental Impact

The Fall of Novec & The Rise of Alternatives

3M’s Novec was the miracle fluid for 2-PIC. It was non-flammable, non-toxic, and efficient. However, it is a PFAS "forever chemical." With 3M exiting the market, the industry faced a crisis.

- The Solution: New fluids are emerging. Chemours Opteon and Honeywell Solstice offer low-GWP (Global Warming Potential) alternatives based on HFO chemistry. These break down in the atmosphere in days, not years.

The shift to single-phase is often driven by sustainability. Cargill's NatureCool 2000 is a prime example. Made from soy, it is:

- Carbon Neutral (plants absorb CO2 while growing).

- Biodegradable (>99% in 28 days).

- High Flash Point (>300°C), making it incredibly safe against fire.

Traditional data centers consume millions of gallons of water for evaporative cooling towers (to cool the air). Immersion cooling uses a closed loop. The facility water can be run through dry coolers (radiators) because the return temperature is high enough that outside air can cool it even in summer. This can reduce water consumption by 95-100%, a critical metric for data centers in drought-stricken areas like Arizona or Spain.

Chapter 4: Implementing Immersion

Retrofit vs. Greenfield

- Greenfield (New Build): This is ideal. You don't need raised floors. You don't need massive air handlers (CRAHs). You can pack racks tighter. The building shell is smaller and cheaper (CapEx savings of ~15-20%).

- Retrofit: This is harder. The tanks are heavy. A standard raised floor might not support the weight of a tank filled with 1000 liters of fluid. Floors often need reinforcement. You also need to re-plumb the facility to bring water to the racks, rather than air.
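A quick floor-loading estimate shows why reinforcement comes up in retrofits. Every input below except the 1000 liters of fluid is a hypothetical placeholder (fluid density, empty tank mass, hardware mass, footprint). Real values come from the tank vendor and a structural engineer.

```python
def floor_load_kg_m2(fluid_liters, fluid_density_kg_m3, tank_dry_kg,
                     hardware_kg, footprint_m2):
    """Distributed floor load (kg/m^2) of a filled immersion tank."""
    if footprint_m2 <= 0:
        raise ValueError("footprint_m2 must be positive")
    fluid_kg = fluid_liters / 1000.0 * fluid_density_kg_m3  # liters -> m^3 -> kg
    return (fluid_kg + tank_dry_kg + hardware_kg) / footprint_m2


load = floor_load_kg_m2(
    fluid_liters=1000.0,        # from the text above
    fluid_density_kg_m3=850.0,  # assumed hydrocarbon fluid
    tank_dry_kg=400.0,          # assumed empty tank mass
    hardware_kg=600.0,          # assumed mass of installed servers
    footprint_m2=2.16,          # assumed 2.4 m x 0.9 m footprint
)
print(f"{load:.0f} kg/m^2")
```

With these placeholder numbers the tank loads the floor at about 856 kg per square meter. Comparing that figure against the rated capacity of the existing slab or raised floor is the first step in a retrofit assessment.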

You can't just dunk any server.

- PVC: Many cables use PVC jackets. Over years, warm oil can extract the plasticizers, making the cables brittle and the oil contaminated.

- Thermal Paste: Standard thermal paste can wash away. Immersion-ready servers use Indium foil or specialized TIMs (Thermal Interface Materials).

- Labels: Paper labels disintegrate. Laser etching or specialized polymer labels are required.

How do you fix a server?

- Lift: Use a lift assist (integrated or mobile) to pull the server vertically.

- Drain: Hang it on the "service rail" for 30-60 seconds.

- Service: If it's a 1-PIC system, the server is oily. Technicians need gloves and a "prep area" with stainless steel tables.

- Clean: If the component needs to be sent back to the manufacturer, it must be degreased (often using an ultrasonic cleaner).

Chapter 5: The Economic Argument (ROI)

CapEx (Capital Expenditure)

- Tanks: Expensive compared to a metal rack.

- Fluid: A significant upfront cost (thousands of dollars per tank).

- Infrastructure: Cheaper. No chillers, no raised floors, and a smaller building.

- Net Result: On a MW basis, greenfield immersion is often cost-neutral or cheaper than Tier 3 air cooling.

OpEx (Operational Expenditure)

- Cooling Energy: Reduced by 90-95%. This drops the total facility energy bill by 30-40%.

- Hardware Lifespan: Hardware runs at a steady temperature. There is little thermal cycling (repeated heating up and cooling down), which wears out electronics. There is no dust and no vibration from fans. Servers can last 20-30% longer.

- Density: You get more compute per square foot, increasing the revenue potential of the building.
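The link between a cut in cooling energy and the total facility bill depends on the baseline. The sketch below works through one case using the PUE definition (total facility power divided by IT power). The baseline split of 1000 kW IT, 600 kW cooling, and 100 kW other overhead is an assumption for illustration, not a measurement.

```python
def pue(it_kw, cooling_kw, other_kw):
    """Power Usage Effectiveness: total facility power over IT power."""
    return (it_kw + cooling_kw + other_kw) / it_kw


def total_savings_fraction(it_kw, cooling_kw, other_kw, cooling_reduction):
    """Fraction of total facility power saved when cooling power drops
    by cooling_reduction (0.95 means a 95% cut)."""
    baseline = it_kw + cooling_kw + other_kw
    improved = it_kw + cooling_kw * (1.0 - cooling_reduction) + other_kw
    return (baseline - improved) / baseline


# Assumed air-cooled baseline: 1000 kW IT, 600 kW cooling, 100 kW other
IT, COOLING, OTHER = 1000.0, 600.0, 100.0

baseline_pue = pue(IT, COOLING, OTHER)
immersion_pue = pue(IT, COOLING * 0.05, OTHER)
savings = total_savings_fraction(IT, COOLING, OTHER, 0.95)

print(f"baseline PUE:  {baseline_pue:.2f}")
print(f"immersion PUE: {immersion_pue:.2f}")
print(f"total savings: {savings:.1%}")
```

For this assumed baseline, a 95% cut in cooling power moves the PUE from 1.70 to 1.13 and trims the total bill by about a third. A facility that already runs an efficient air-cooled design would see a smaller gain, and removing server fans would add savings on the IT side that this sketch does not model.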

Chapter 6: Safety & Regulatory Compliance

Fire Safety

Mineral oils can burn. However, fluids like Cargill’s FR3 or NatureCool have flash points above 300°C. They are classified as "K-class" fluids (difficult to ignite). This often simplifies fire suppression requirements compared to traditional rooms.

The Standards

- UL 62368-1 Edition 4: The regulatory gold standard. It mandates tests for material compatibility, pressure relief, and leakage containment.

- OCP Guidelines: The Open Compute Project provides the "best practices" that most hyperscalers follow. If a tank is "OCP Ready," it means it accepts standard 21-inch or 19-inch OCP servers and meets safety specs.

Chapter 7: Future Outlook - The Era of Heat Reuse

The most exciting frontier is not just cooling, but energy reuse.

In an air-cooled data center, the waste air is typically around 35°C (95°F). This is "low-grade" heat. It's hard to use for anything other than warming the room itself.

In an immersion tank, the return liquid can be 50°C - 60°C (122°F - 140°F). This is "high-grade" heat.
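One way to see why the higher return temperature matters is the ideal (Carnot) coefficient of performance of a heat pump, COP = T_sink / (T_sink - T_source) with temperatures in kelvin. The sketch below assumes a district heating supply temperature of 80°C, which is a placeholder. Real heat pumps reach only a fraction of the ideal value, so the numbers show the trend rather than a forecast.

```python
def carnot_cop_heating(source_c, sink_c):
    """Ideal heating COP for lifting heat from source_c to sink_c (degrees Celsius)."""
    if sink_c <= source_c:
        raise ValueError("sink_c must be higher than source_c")
    sink_k = sink_c + 273.15
    return sink_k / (sink_c - source_c)


SUPPLY_C = 80.0  # assumed district heating supply temperature

cop_air = carnot_cop_heating(35.0, SUPPLY_C)        # air-cooled waste heat
cop_immersion = carnot_cop_heating(55.0, SUPPLY_C)  # immersion return liquid

print(f"ideal COP from 35 C: {cop_air:.1f}")
print(f"ideal COP from 55 C: {cop_immersion:.1f}")
```

Starting from 55°C instead of 35°C nearly doubles the ideal COP (about 14 versus about 8), meaning far less electricity is needed per unit of heat delivered to the network.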

Real World Application: Helsinki, Finland

Microsoft and the utility company Fortum are building a system where waste heat from new data centers will provide 40% of the district heating for the cities of Espoo and Kauniainen. The hot water from the data center is pumped into heat pumps, boosted slightly, and sent into the city's pipe network to heat homes. This turns the data center into a massive, digital boiler.

Conclusion

Data Center Immersion Cooling is a convergence of physics, engineering, and sustainability. It solves the immediate problem of high-density AI compute while offering a path to a greener future. While the transition requires new skills, new supply chains, and a shift in mindset, the economics and physics are undeniable. As we build the intelligence of tomorrow, we will do it in the quiet, efficient depths of dielectric fluid. The revolution is liquid.
